Once the audio and visual halves were working separately, putting them together was an excellent challenge. My favorite bug was one where the audio seemed to be working, but the pendulum display jumped around randomly, even though I had used the same math in the JavaScript version and it worked there.

Solution:

After a long staring contest and a lot of mashing of the 'slow down' button, I figured out that the motion was not random; it was just far too fast. Then I remembered: audio processing has to happen much faster than visual processing. Our eyes can make do with far fewer frames per second than our ears need samples per second, so it seemed reasonable to assume that the visuals were not being redrawn 44,100 times per second by default, especially since video processing is computationally expensive.
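
To put rough numbers on "far too fast": if the physics is advanced by a fixed step on every callback, the simulated time that passes per real second scales with how often that callback fires. The sketch below assumes a 30 Hz GUI refresh rate; that figure is hypothetical, since the plugin's actual timer interval is not stated, and the function names are illustrative only.

```cpp
// Rates used for the comparison. 44,100 Hz is the audio sample rate from the
// text above; the 30 Hz GUI refresh rate is an assumed, illustrative value.
constexpr double kSampleRate = 44100.0;
constexpr double kGuiRate    = 30.0;

// With a fixed step dt per callback, simulated seconds that elapse per
// wall-clock second = callbacks per second * dt.
double simulatedSecondsPerSecond(double callbacksPerSecond, double dt) {
    return callbacksPerSecond * dt;
}

// For the same dt, how many times faster the pendulum moves when it is
// stepped once per audio sample instead of once per GUI redraw.
double speedupFactor() {
    return kSampleRate / kGuiRate;
}
```

Under these assumptions the speedup is 44,100 / 30 = 1,470, so a step size tuned to look natural at the redraw rate makes the pendulum race through roughly 1,470 steps between consecutive frames when it is stepped per sample.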

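Because the physical model of the pendulum was being updated on every new audio frame, it advanced through many steps between each redraw, so every frame showed it somewhere seemingly unrelated to the last. Instead, I changed the code to update the physical model whenever the visuals are redrawn: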

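```cpp
case GUI_TIMER_PING:
{
    // Advance the physical model once per GUI redraw, not once per audio frame
    if(m_pWaveFormView)
    {
        getAcceleration1();        // acceleration of pendulum 1
        getAcceleration2();        // acceleration of pendulum 2
        getCoordinates();          // coordinates of the pendulum, including the tip
        getDistances();            // distance from the tip to each harmonic point
        updateVelocityAndAccel();  // update velocity and acceleration for the next iteration

        // Hand the new values to the custom view, then mark it invalid so it is repainted
        m_pWaveFormView->setCoordinates(x1, y1, x2, y2, harmonic1x, harmonic1y, harmonic2x, harmonic2y, distance1, distance2);
        m_pWaveFormView->invalid();
    }
    return NULL;
}
```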

We calculate the acceleration of pendulums 1 and 2, get the coordinates of the tip, calculate the distance from that tip to each harmonic point, update the velocity and acceleration for the next iteration, and then pass everything to the WaveFormView object and mark it invalid so the pendulum is redrawn.
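
The same per-tick sequence can be sketched as a small self-contained struct. This is not the plugin's code: the masses, lengths, gravity, the positions of the "harmonic points", and every method name are hypothetical. It assumes the widely used textbook equations of motion for a double pendulum and a simple semi-implicit Euler update, and it keeps the order of the calls in the handler above (accelerations, coordinates, distances, then the velocity update).

```cpp
#include <cmath>

// Hypothetical double-pendulum model; names loosely mirror the handler above.
struct DoublePendulum {
    double m1 = 10.0, m2 = 10.0;     // bob masses (assumed values)
    double L1 = 100.0, L2 = 100.0;   // arm lengths in pixels (assumed values)
    double g = 1.0;                  // gravity in per-tick units (assumed)
    double a1 = 0.0, a2 = 0.0;       // angles in radians, 0 = hanging straight down
    double v1 = 0.0, v2 = 0.0;       // angular velocities
    double acc1 = 0.0, acc2 = 0.0;   // angular accelerations
    double x1 = 0.0, y1 = 0.0, x2 = 0.0, y2 = 0.0;  // bob positions relative to the pivot
    double hx[2] = {0.0, 0.0}, hy[2] = {0.0, 0.0};  // hypothetical harmonic points
    double dist[2] = {0.0, 0.0};                    // tip-to-harmonic-point distances

    // Textbook double-pendulum equations for both angular accelerations.
    void computeAccelerations() {
        const double d = a1 - a2;
        const double den = 2 * m1 + m2 - m2 * std::cos(2 * a1 - 2 * a2);
        acc1 = (-g * (2 * m1 + m2) * std::sin(a1)
                - m2 * g * std::sin(a1 - 2 * a2)
                - 2 * std::sin(d) * m2 * (v2 * v2 * L2 + v1 * v1 * L1 * std::cos(d)))
               / (L1 * den);
        acc2 = (2 * std::sin(d) * (v1 * v1 * L1 * (m1 + m2)
                + g * (m1 + m2) * std::cos(a1)
                + v2 * v2 * L2 * m2 * std::cos(d)))
               / (L2 * den);
    }

    // Screen-style coordinates: y grows downward from the pivot.
    void computeCoordinates() {
        x1 = L1 * std::sin(a1);
        y1 = L1 * std::cos(a1);
        x2 = x1 + L2 * std::sin(a2);
        y2 = y1 + L2 * std::cos(a2);
    }

    // Euclidean distance from the tip (x2, y2) to each harmonic point.
    void computeDistances() {
        for (int i = 0; i < 2; ++i)
            dist[i] = std::hypot(x2 - hx[i], y2 - hy[i]);
    }

    // Semi-implicit Euler: update velocities first, then angles.
    void integrate(double dt) {
        v1 += acc1 * dt;
        v2 += acc2 * dt;
        a1 += v1 * dt;
        a2 += v2 * dt;
    }

    // One GUI tick, in the same order as the GUI_TIMER_PING handler.
    void tick(double dt) {
        computeAccelerations();
        computeCoordinates();
        computeDistances();
        integrate(dt);
    }
};
```

Because `g` and `dt` are expressed per tick here, the pendulum's apparent speed is tied to how often `tick` is called, which is exactly why calling it per audio sample made the motion look random.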

The audio uses the same physical model values as the visualizer; it just reads them far more often than they change. This also had the ~~unintended side effect~~ completely planned feature of making the plugin sound much smoother, because the pendulum values were no longer being recalculated at audio rate.
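
The split described above, where the GUI timer writes the model values and the audio callback only reads them, can be sketched as follows. This is an illustration under assumptions, not the plugin's implementation: `SharedPendulumState`, `publishFromGuiTimer`, and `processBlock` are hypothetical names, `std::atomic` is used because the GUI timer and the audio callback typically run on different threads, and scaling the input by the average distance is a placeholder mapping rather than the plugin's actual synthesis.

```cpp
#include <atomic>
#include <cstddef>

// Hypothetical values shared between the GUI timer (writer) and audio (reader).
struct SharedPendulumState {
    std::atomic<float> distance1{0.0f};
    std::atomic<float> distance2{0.0f};
};

// Called at GUI rate, e.g. right after the model is stepped in the timer handler.
void publishFromGuiTimer(SharedPendulumState& s, float d1, float d2) {
    s.distance1.store(d1, std::memory_order_relaxed);
    s.distance2.store(d2, std::memory_order_relaxed);
}

// Called at audio rate: reads the latest published values on every sample but
// never advances the physics, so the values are held between GUI ticks.
// The gain mapping below is a placeholder for illustration only.
void processBlock(const SharedPendulumState& s, const float* in, float* out,
                  std::size_t numFrames, float maxDistance) {
    for (std::size_t i = 0; i < numFrames; ++i) {
        const float d1 = s.distance1.load(std::memory_order_relaxed);
        const float d2 = s.distance2.load(std::memory_order_relaxed);
        out[i] = in[i] * (0.5f * (d1 + d2) / maxDistance);
    }
}
```

Between two timer ticks every sample sees the same held values, so the audio only changes when the pendulum does, at the GUI rate.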

This was a memorable bug because I had to think about it for a while, but in the end all I had to do was move those function calls over to the visual callback, and the difference was startling.

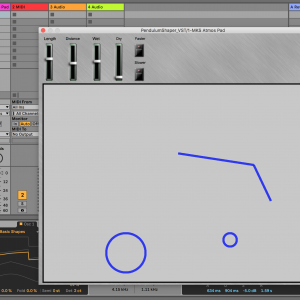

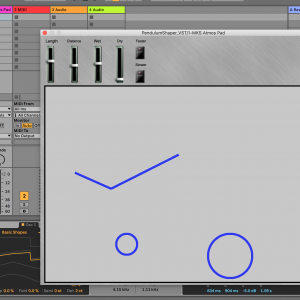